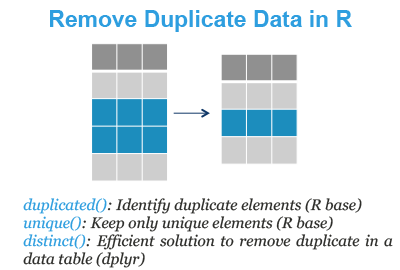

This tutorial describes how to identify and remove duplicate data in R.

You will learn how to use the following R base and dplyr functions:

- R base functions:

  - duplicated(): for identifying duplicated elements, and
  - unique(): for extracting unique elements.

- distinct() [dplyr package]: for removing duplicate rows in a data frame.

Required packages

Load the tidyverse packages, which include dplyr:

library(tidyverse)

Demo dataset

We’ll use the R built-in iris data set, which we start by converting into a tibble data frame (tbl_df) for easier data analysis.

my_data <- as_tibble(iris)

my_data

## # A tibble: 150 x 5

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## <dbl> <dbl> <dbl> <dbl> <fct>

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## # ... with 144 more rows

Find and drop duplicate elements

The R function duplicated() returns a logical vector in which TRUE marks the elements of a vector (or the rows of a data frame) that duplicate an earlier element. The first occurrence of each value is marked FALSE.

Given the following vector:

x <- c(1, 1, 4, 5, 4, 6)

- To find the position of duplicate elements in x, use this:

duplicated(x)

## [1] FALSE TRUE FALSE FALSE TRUE FALSE

- Extract duplicate elements:

x[duplicated(x)]

## [1] 1 4

- If you want to remove duplicated elements, use !duplicated(), where ! is a logical negation:

x[!duplicated(x)]

## [1] 1 4 5 6

- In the same way, you can remove duplicate rows from a data frame based on the values of a column, as follows:

# Remove duplicates based on the Sepal.Width column

my_data[!duplicated(my_data$Sepal.Width), ]

## # A tibble: 23 x 5

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## <dbl> <dbl> <dbl> <dbl> <fct>

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## # ... with 17 more rows

! is a logical negation. !duplicated() means that we don’t want duplicate rows.
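By default, duplicated() leaves the first occurrence of each value unflagged. As a small sketch that goes beyond the examples above, the base R argument fromLast = TRUE scans from the end instead, and combining both directions flags every copy of a repeated value. The names from_last and all_copies are only illustrative.

```r
x <- c(1, 1, 4, 5, 4, 6)

# Scan from the end: the last occurrence of each value stays unflagged
from_last <- duplicated(x, fromLast = TRUE)

# Flag every element whose value occurs more than once
all_copies <- duplicated(x) | duplicated(x, fromLast = TRUE)

# Values that occur exactly once
x[!all_copies]   # 5 6
```

This variant is useful when you want to inspect all members of a duplicated group rather than only the later copies.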

Extract unique elements

Given the following vector:

x <- c(1, 1, 4, 5, 4, 6)

You can extract unique elements as follows:

unique(x)

## [1] 1 4 5 6

It’s also possible to apply unique() to a data frame to remove duplicated rows, as follows:

unique(my_data)

## # A tibble: 149 x 5

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## <dbl> <dbl> <dbl> <dbl> <fct>

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## # ... with 143 more rows

Remove duplicate rows in a data frame

The function distinct() [dplyr package] can be used to keep only unique/distinct rows from a data frame. If there are duplicate rows, only the first row is preserved. It’s an efficient version of the R base function unique().

Remove duplicate rows based on all columns:

my_data %>% distinct()

## # A tibble: 149 x 5

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## <dbl> <dbl> <dbl> <dbl> <fct>

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## # ... with 143 more rows

Remove duplicate rows based on certain columns (variables):

# Remove duplicated rows based on Sepal.Length

my_data %>% distinct(Sepal.Length, .keep_all = TRUE)

## # A tibble: 35 x 5

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## <dbl> <dbl> <dbl> <dbl> <fct>

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## # ... with 29 more rows

# Remove duplicated rows based on Sepal.Length and Petal.Width

my_data %>% distinct(Sepal.Length, Petal.Width, .keep_all = TRUE)

## # A tibble: 110 x 5

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## <dbl> <dbl> <dbl> <dbl> <fct>

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## # ... with 104 more rows

The option .keep_all is used to keep all variables in the data.
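To make the effect of .keep_all concrete, here is a minimal sketch on a small made-up tibble (toy and its values are hypothetical, not part of the iris data). Without .keep_all = TRUE, distinct() returns only the columns named in the call.

```r
library(dplyr)

# Hypothetical toy data: two rows share the same value of `a`
toy <- tibble(a = c(1, 1, 2), b = c("x", "y", "z"))

# Default: only the column `a` is returned
only_a <- toy %>% distinct(a)

# .keep_all = TRUE: all columns are kept, taking the first row for each value of `a`
with_all <- toy %>% distinct(a, .keep_all = TRUE)

names(only_a)     # "a"
names(with_all)   # "a" "b"
with_all$b        # "x" "z"
```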

Summary

In this chapter, we describe key functions for identifying and removing duplicate data:

- Remove duplicate rows based on one or more column values:

my_data %>% dplyr::distinct(Sepal.Length)

- R base function to extract unique elements from vectors and data frames:

unique(my_data)

- R base function to determine duplicate elements:

duplicated(my_data)

You are missing a comma here after the row x[duplicated(x)]. It should be like this: x[duplicated(x), ]

x is a vector, so you don’t need to add a comma
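The difference can be sketched as follows, assuming a small made-up data frame df (hypothetical, base R only). A vector has a single dimension, so the logical index goes directly inside the brackets, whereas a data frame needs the index in the row position, followed by a comma.

```r
x <- c(1, 1, 4, 5, 4, 6)

# Vector: one dimension, no comma
x[!duplicated(x)]

# Hypothetical data frame with a repeated value in column `g`
df <- data.frame(g = c("a", "a", "b"), v = c(10, 20, 30))

# Data frame: the logical vector selects rows, so it goes before the comma
df_unique <- df[!duplicated(df$g), ]
df_unique$v   # 10 30
```

On a tibble, leaving the comma out makes `[` treat the logical vector as a column index, which can produce an error about the length of the logical index vector.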

Anyway, your comment was very useful to me, because I am working with a data frame (in my case). Thanks a lot.

I am using a data table, and it was also very useful!

Thanks!

Thank you for your positive feedback. Highly appreciated!

Error: Length of logical index vector for `[` must equal number of columns (or 1):

* `.data` has 1348 columns

* Index vector has length 1191

Please clarify your question and provide a reproducible example.

Thanks, it is a simple and useful tutorial.

Thank you Juliàn for your feedback!

What an excellent tutorial, simple and straightforward, yet to the point. I have used it several times.

Thank you for your positive feedback!

Hi, I’m trying to KEEP ONLY duplicate rows based on a column. I first tested for unique rows:

unique(Jan_19)

# A tibble: 178,492 x 22

then the number of duplicates based on my CON column:

Jan_19[duplicated(Jan_19$CON), ]

# A tibble: 251 x 22

then tried to drop the rows where CON was not duplicated:

Jan_19 %>% !distinct(CON, .keep_all = TRUE)

Error in distinct(CON, .keep_all = TRUE) : object ‘CON’ not found

Any advice? Thanks for the code, quite useful.

You can use the following R code:
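The code of this reply did not survive on the page. One possible approach, shown as a sketch on a made-up tibble (jan and its values are hypothetical stand-ins for Jan_19), is to group by the column and keep the groups with more than one row. This is not necessarily the code originally posted.

```r
library(dplyr)

# Hypothetical stand-in for Jan_19: CON values 1 and 3 are repeated
jan <- tibble(CON = c(1, 1, 2, 3, 3, 3), value = letters[1:6])

# Keep every row whose CON value occurs more than once
dups_only <- jan %>%
  group_by(CON) %>%
  filter(n() > 1) %>%
  ungroup()

dups_only$CON   # 1 1 3 3 3
```

In the attempt above, distinct() never receives the data frame, so CON is looked up as an ordinary object and is not found.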

Kassambara,

the lesson “Identify and Remove Duplicate Data in R” was extremely helpful for my task.

Question:

I have two data frames like “iris”, say iris for Country A and B.

The data frames are quite large, up to 1 million rows and > 10 columns.

I’d like to check whether a row in B contains the same input as a row in A.

E.g. in ‘iris’ row 102 == 143;

let’s assume row 102 is in iris country_A and row 143 in iris…._B. How could I identify any duplicates in these two data frames?

I searched on Stack Exchange but didn’t find any helpful solution.

Thanks

Now I have a slightly harder task:

What should I do if I want to remove only subsequent, immediate duplicates, but preserve them if they are separated by something else?

Example: you have a data frame with object id, time and the place where it happened:

df <- data.frame(id=c(1,1,1,2,2,2), time=rep(1:3, 2), place=c(1,2,1,1,1,2))

and I would like to extract the paths of these objects. For example, object 1 was at place 1, then 2, then back at 1, and I would like to preserve that in the data so that later I can see that it moved from 1 to 2 and then from 2 to 1.

Any ideas?

If you want to keep distinct rows based on multiple columns, you can proceed as follows:

You are always on point! A quick one…

What are the major check points in data management? I know there are duplicates, missing data, ….

Thank you for your feedback, highly appreciated. There is also outlier identification.

How can I permanently remove the duplicates? Once I use this function, it acts only like a filter, and the original table stays intact.

To overwrite your original table, type this:
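The code of this reply did not survive on the page. A likely form, given as a sketch, is to assign the result back to the same name, since dplyr verbs return a new data frame rather than modifying their input.

```r
library(dplyr)

my_data <- as_tibble(iris)

# Overwrite my_data with its de-duplicated version
my_data <- my_data %>% distinct()

nrow(my_data)   # 149, matching the distinct() output in the tutorial
```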